There’s this scene in “Silicon Valley” where Erlich pleads with Richard to focus on improving their startup’s cloud architecture.

“[It’s] just a giant turd that’s clogging up our pipes, we have to call in a plumber to fix it,” he says, describing the cloud as “this tiny, little, shitty area, which… in many ways is the future of computing.”

He wasn’t wrong about that future stuff…

… and he wasn’t far off on the plumber, either.

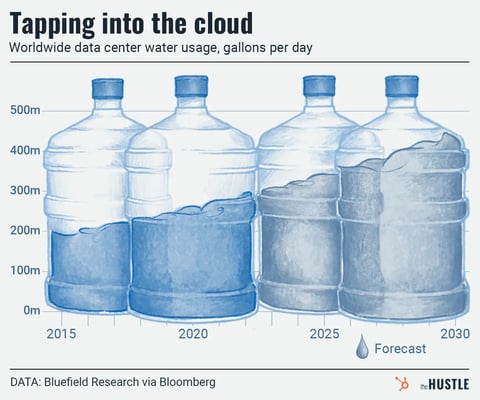

Globally, data centers are forecast to consume 450m gallons of water daily by 2030, up from ~205m in 2016, according to data reviewed by Bloomberg.

This is particularly worrisome for drought-stricken farming areas like Talavera de la Reina, Spain, where a $1.1B data center planned by Meta could gulp 176m gallons of water a year.

- The project is expected to create 250 permanent jobs, and Meta says it will restore water, though it’s unclear where or how.

It’s a global issue, accelerated by AI

Data centers need lots of energy to make the web run, and much of the heat their servers generate is carried away with water-based cooling. As drought worsens worldwide, that thirst has become a point of contention everywhere from Chile to the US to the Netherlands.

- Part of the concern centers on transparency: only 39% of data centers measured their water usage last year, according to one group.

Another challenge: Demand for computing power is accelerating faster than sustainability efforts. (Yes, AI, we’re talking about you.)

Want to picture the impact in terrifying, everyday terms? We’ve got you covered: researchers have estimated that a 20-question convo with ChatGPT uses ~500 milliliters of water, about one standard water bottle.

*Slowly closes ChatGPT browser tab, 600 questions later…*