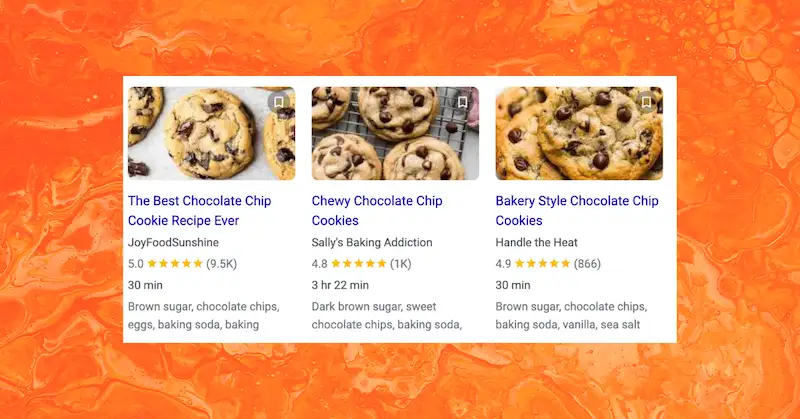

For a while now, Google has offered far more than the “ten blue links” it started with, including images, videos, maps, shopping, and other filters.

At its recent Search On event, the company outlined the next frontier of search, which it expects will bring these formats together to give more detailed answers.

Machine learning will play a crucial role

Google’s Multitask Unified Model (MUM) will help add context to deliver more comprehensive results. Some examples include:

- Offering “Things to know” boxes for a given search that point users to related subtopics to explore

- Returning subsections of videos that relate to a given search, even if the entire video doesn’t

- Showing available inventory at local stores for shopping-related searches

While this new level of granularity could make Google Search even better, there is a catch.

To get better outputs, users need to give Google more inputs

One way Google hopes to make this happen is by increasing usage of Google Lens, its image recognition software that lets users search for items through their camera. To that end, Google is making Lens easier to find and use by:

- Building Lens functionality into the Google app on iOS

- Adding Lens to the Chrome browser on desktop

By collecting more searches across text, images, and videos, Google believes it will be able to help users find exactly what they need.

But there are downsides

With users’ expectations rising, Google will be under more pressure to deliver perfect search results.

This dynamic, previously referred to as the “one true answer” problem, opens the door to criticism about bias, which is especially complicated given the recent controversy around bias in the company’s AI.

On a brighter note, check out some of the cool things you can do with Google Lens.