Photo: Xiaolu Chu/Getty Images

Autonomous driving may be the way of the future, but it just hit a speed bump.

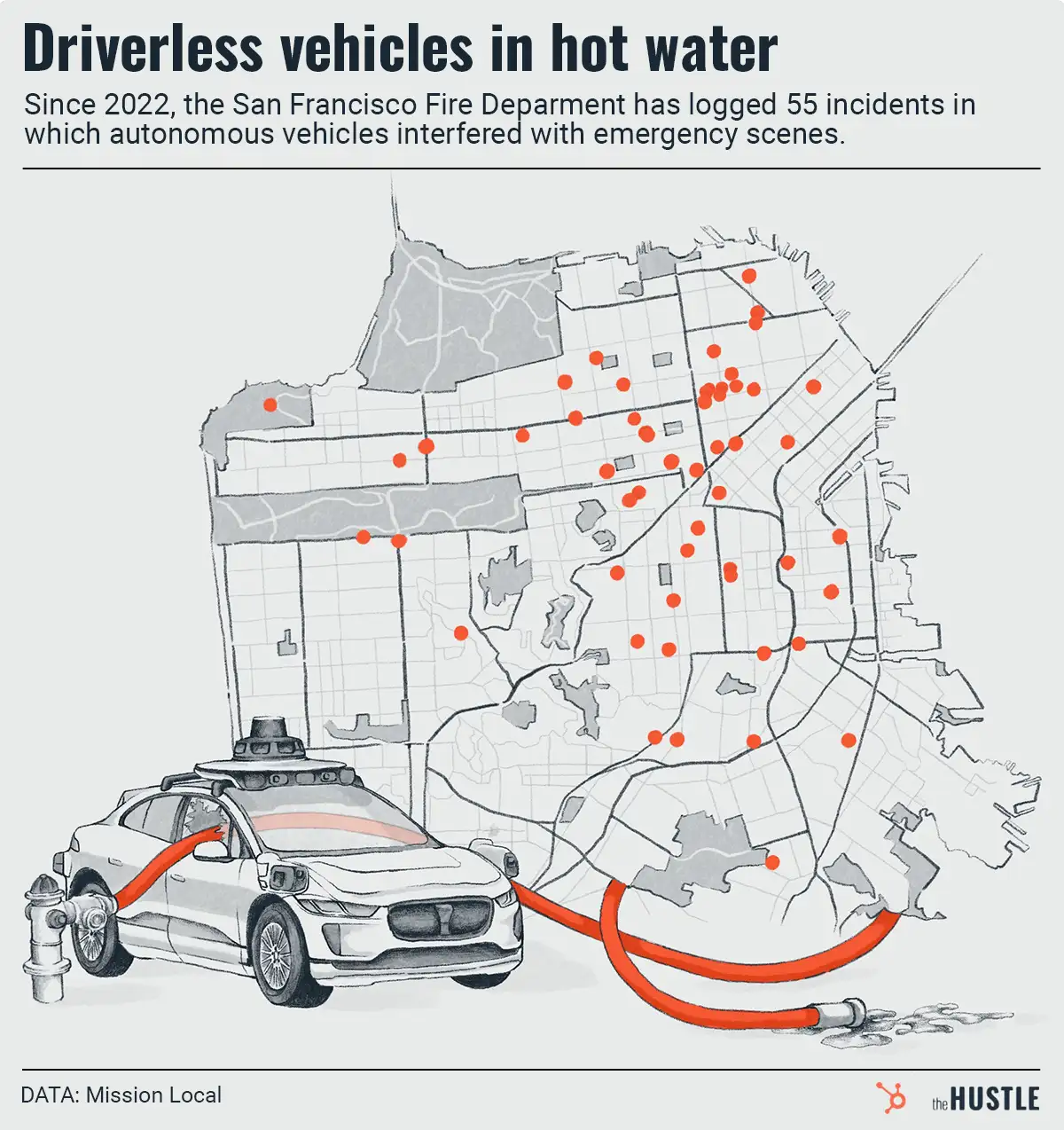

The National Highway Traffic Safety Administration (NHTSA) opened a formal investigation into Tesla’s Autopilot technology. The probe:

- Covers 765k vehicles, spanning the company’s current models and earlier ones dating back to 2014.

- Was prompted by 11 crashes since 2018 in which Tesla drivers using Autopilot hit emergency vehicles.

Tesla’s Autopilot technology is powered by a neural network…

… that is trained on a library of thousands of images.

Each vehicle is equipped with cameras that monitor its surroundings and feed the neural network, which applies what it learned from those images to determine whether obstacles are in its path.

The system performs well in most situations but only identifies patterns it’s been trained on and has repeatedly struggled with:

- Parked emergency vehicles

- Large perpendicular trucks in its path

The bigger issue is that drivers expect Autopilot to do all the work

While Tesla warns that drivers must be ready to take the wheel at any time, regulators claim the company is misleading customers with marketing material that makes it seem like the technology works on its own.

Tesla vehicles measure the force applied to the steering wheel to check that drivers are attentive, but drivers can game the system by hanging weights or water bottles on the wheel to simulate hands on it.

This has led to unsafe situations, including:

- Drunk driving

- Drivers riding in the back seat with no one behind the wheel

Regulators say a major focus of the investigation will be on how the vehicle monitors and enforces driver engagement.

Musk still argues Autopilot is 10x safer than the average vehicle

But experts claim his statistics are misleading.

An investigation could lead to a recall or other action by the NHTSA, which could be a serious pothole for Musk and Co.