There are 1B+ iPhone users in the world.

Unfortunately, some of those users traffic in child pornography (AKA CSAM, or child sexual abuse material).

Last week, Apple made a big move to stop the spread of CSAM.

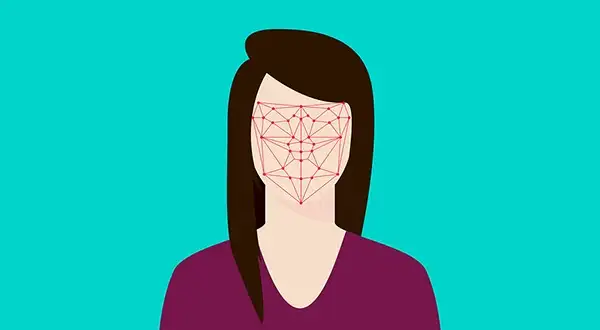

Apple will scan user iPhones…

… for illicit photos, as reported by Daring Fireball’s John Gruber. Here is a simplified breakdown of the steps:

- Apple has a database of digital fingerprints (hashes) of known CSAM from the National Center for Missing and Exploited Children (NCMEC)

- Apple compares this database to encrypted photos uploaded to iCloud

- If the number of potential CSAM matches stays below a minimum threshold, Apple won’t decrypt the photos and takes no further action

- If the minimum threshold of potential CSAM matches is reached, the account is flagged

- At this point, a human will inspect the decrypted photos to rule out false positives (e.g., it could be parents uploading lots of photos of their newborn)
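
To make the threshold idea concrete, here is a minimal Python sketch of the steps above. It is an illustration only, not Apple's implementation: the `fingerprint` function uses a plain SHA-256 as a stand-in for whatever image-matching technique Apple uses, the `MATCH_THRESHOLD` value is made up, and all names are hypothetical. The real system also involves encryption steps that are not modeled here.

```python
import hashlib

# Hypothetical threshold for illustration only; not Apple's actual value.
MATCH_THRESHOLD = 3


def fingerprint(photo_bytes: bytes) -> str:
    """Stand-in fingerprint (assumption): SHA-256 only matches byte-identical files."""
    return hashlib.sha256(photo_bytes).hexdigest()


def count_matches(uploads, known_hashes):
    """Count how many uploaded photos have a fingerprint in the known database."""
    return sum(1 for photo in uploads if fingerprint(photo) in known_hashes)


def review_account(uploads, known_hashes, threshold=MATCH_THRESHOLD):
    """Below the threshold nothing happens; at or above it, a human reviews."""
    matches = count_matches(uploads, known_hashes)
    if matches < threshold:
        return "no action"
    return "flagged for human review"


# Toy database of "known" fingerprints (placeholder byte strings, not real data).
known = {fingerprint(b"known-1"), fingerprint(b"known-2"), fingerprint(b"known-3")}

print(review_account([b"family photo", b"known-1"], known))       # no action
print(review_account([b"known-1", b"known-2", b"known-3"], known))  # flagged for human review
```

The key design point is that a single match does nothing on its own. Only an account that crosses the threshold is surfaced to a human reviewer.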

Here is the conundrum

While combating CSAM is the noblest of goals, not everyone agrees with Apple’s unilateral approach.

Will Cathcart — the head of Facebook’s WhatsApp, which reported 400k+ CSAM cases last year — says Apple built a “surveillance system that could very easily be used to scan private content for anything they or a government decides it wants to control.”

For example: China could ask Apple to flag all users who have photos of Tiananmen Square.

Apple holds itself up as a paragon of privacy

Tim Cook has even said privacy is a “human right.”

Can it maintain this promise with the new CSAM initiative? To further clarify its position, Apple put out a 6-page FAQ on Monday (in it, Apple says it will “refuse government demands” to track non-CSAM images).

Given how serious this matter is from every angle, expect many more updates.